AI-Powered Parsing & Brand Monitoring Case Study | Flexi IT’s Scalable Solution

Introduction

Our goal was to build an efficient parsing process for articles on a well-known website, one that preserves both the text and the associated images for later analysis and deliberately skips live streaming content.

Challenge

The client required monitoring articles on a prominent website to track mentions of their brand. Additionally, an automated solution was necessary to identify whether these mentions occurred in a positive or negative context.

Solution

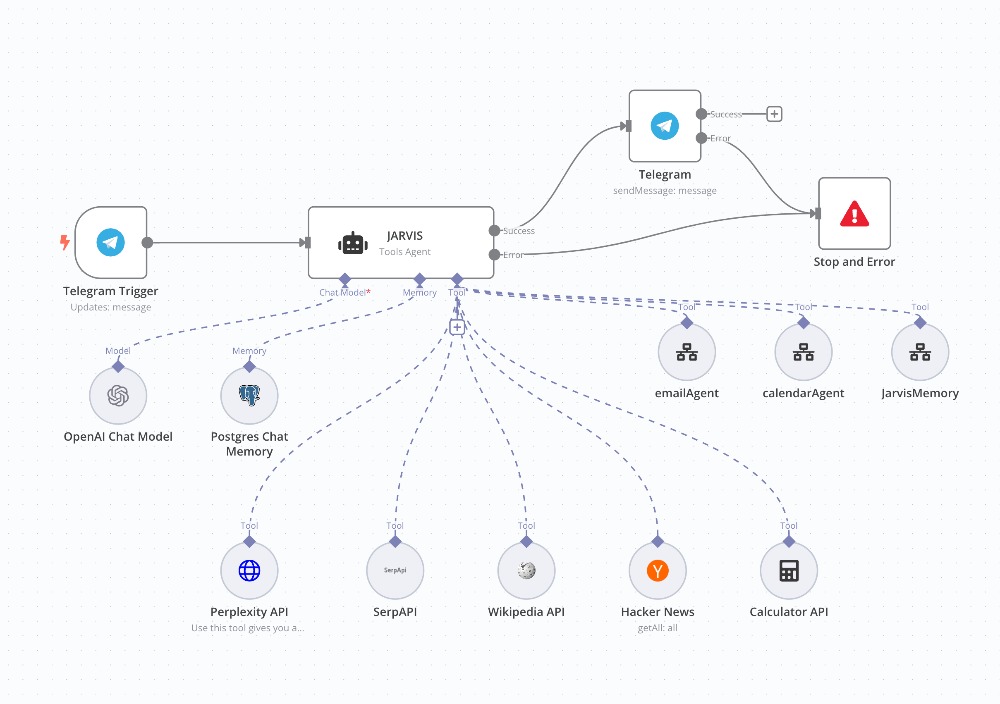

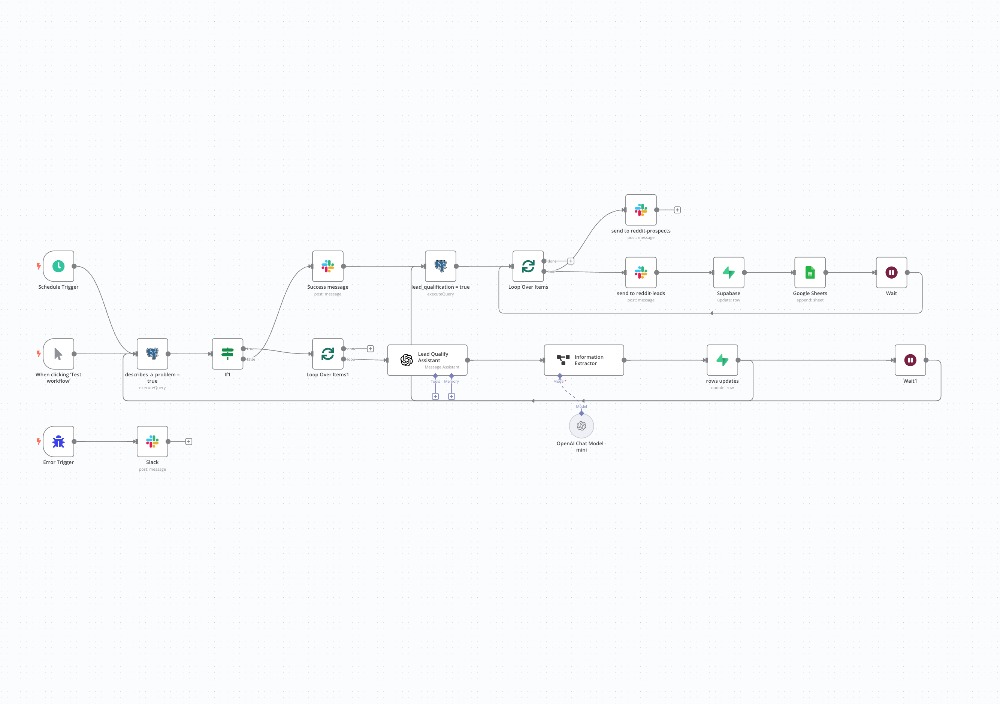

We developed a custom parser designed to extract content from articles on the target website, with the capability to analyse the content for specified keywords and determine their context.

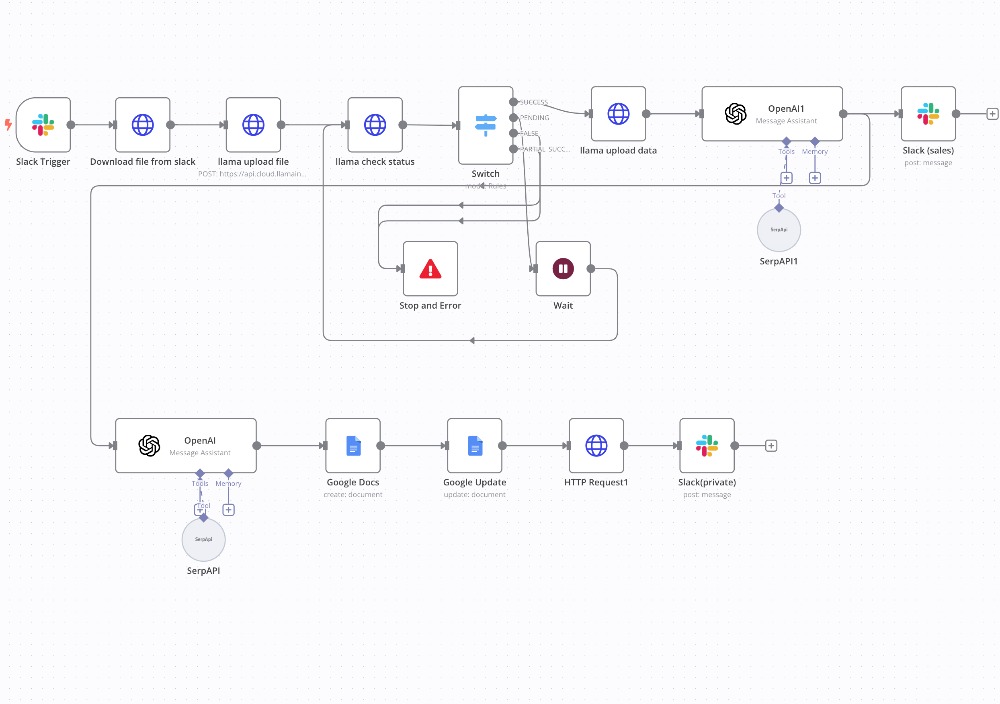

Parsing Process

- Link Collection: Scanning the website and selecting relevant URLs.

- Article Crawling: Extracting text and images while deliberately skipping live streams.

- Filtering: Keyword detection within the article text.

- Data Storage: Efficiently saving data into a database and caching where necessary.

- Context Detection: Utilising advanced AI/ML tools for accurate sentiment analysis.

- Error Handling: Robust logging mechanisms with automated notifications for error tracking.
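The filtering and context-detection steps above can be sketched roughly as follows. This is a minimal illustration, not the project's code: the `LIVE_MARKERS` URL fragments, the `Mention` record and the function names are all hypothetical, and the classifier is passed in as a plain callable so that any AI/ML backend could be plugged in. The `toy_classifier` at the end is only a placeholder for that step.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical URL fragments marking live-stream pages; the real site's
# URL scheme is not described in this case study.
LIVE_MARKERS = ("/live/", "/livestream/")


@dataclass
class Mention:
    keyword: str
    snippet: str
    sentiment: str


def is_live_stream(url: str) -> bool:
    """Return True when the URL looks like a live stream to be skipped."""
    lowered = url.lower()
    return any(marker in lowered for marker in LIVE_MARKERS)


def find_snippets(text: str, keyword: str, window: int = 60) -> List[str]:
    """Return the text around each whole-word, case-insensitive keyword hit."""
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    snippets = []
    for match in pattern.finditer(text):
        start = max(0, match.start() - window)
        end = min(len(text), match.end() + window)
        snippets.append(text[start:end].strip())
    return snippets


def analyse_article(
    url: str,
    text: str,
    keywords: Iterable[str],
    classify: Callable[[str, str], str],
) -> List[Mention]:
    """Skip live streams, then classify the context of every keyword hit."""
    if is_live_stream(url):
        return []
    mentions = []
    for keyword in keywords:
        for snippet in find_snippets(text, keyword):
            mentions.append(Mention(keyword, snippet, classify(snippet, keyword)))
    return mentions


def toy_classifier(snippet: str, keyword: str) -> str:
    """Placeholder for the AI/ML step: flags snippets that mention a recall."""
    return "negative" if "recall" in snippet.lower() else "positive"
```

Calling `analyse_article(url, text, ["Acme"], toy_classifier)` returns one `Mention` per keyword hit, or an empty list for a live-stream URL. Whole-word matching keeps a brand such as "Acme" from matching inside "Acmeville"; keywords that begin or end with punctuation would need a different boundary rule.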

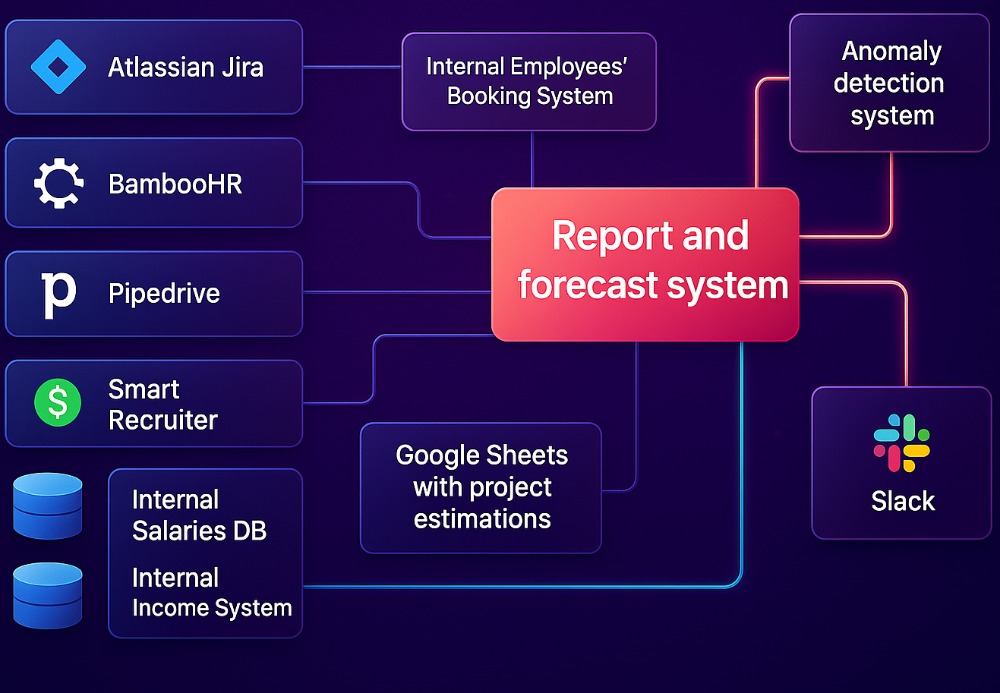

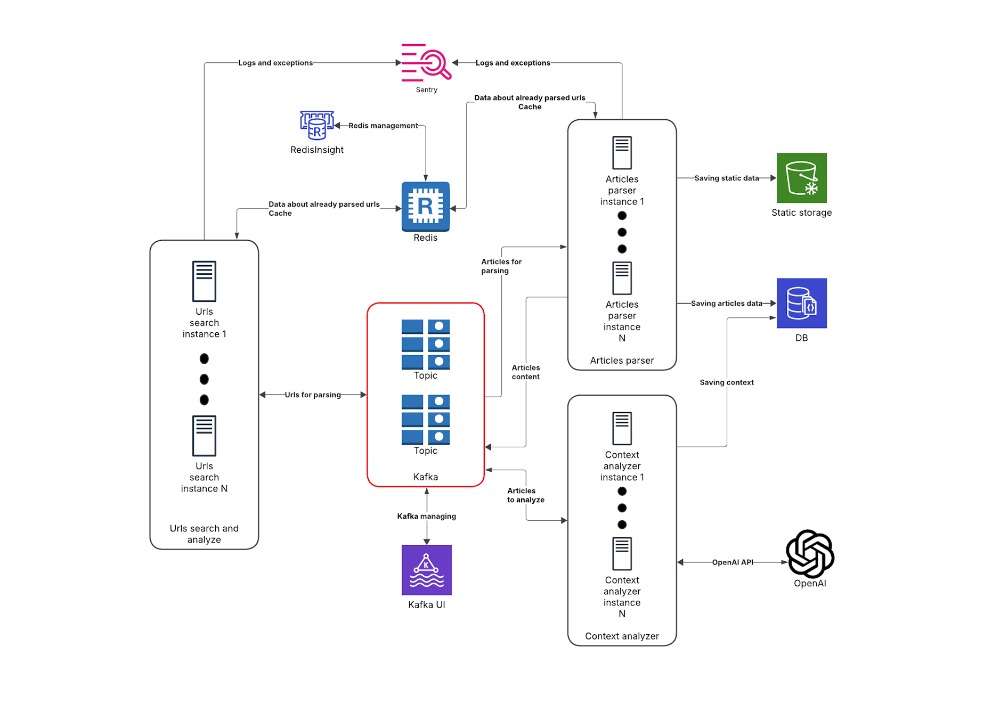

Architecture

We selected a microservices architecture for optimal scalability and modular expansion capabilities. The architecture includes:

- URL Collector Service: Gathers links to relevant articles.

- Article Parser: Processes the articles and extracts necessary data.

- Context Analyzer: Determines sentiment around keyword mentions.

- Database: Stores collected data.

- Cache System: Provides rapid data access.

- Message Broker: Facilitates communication between microservices.

- Error Tracking: Centralised logging and issue reporting.
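To illustrate how the services listed above might hand work to one another, here is a small sketch of a message passed from the URL Collector to the Article Parser. Everything in it is an assumption made for illustration: the `ArticleFound` fields, the topic name and the `InMemoryBroker` class, which only stands in for the real message broker so the example runs without external services.

```python
import json
from collections import defaultdict, deque
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ArticleFound:
    """Hypothetical message the URL Collector publishes for the Article Parser."""
    url: str
    discovered_at: str  # ISO 8601 timestamp


def encode(message: ArticleFound) -> bytes:
    """Serialise a message to UTF-8 JSON bytes for the broker."""
    return json.dumps(asdict(message)).encode("utf-8")


def decode(payload: bytes) -> ArticleFound:
    """Rebuild the message from the bytes received from the broker."""
    return ArticleFound(**json.loads(payload.decode("utf-8")))


class InMemoryBroker:
    """Stand-in for the message broker: one FIFO queue per topic."""

    def __init__(self) -> None:
        # Maps a topic name to a queue of raw payloads.
        self._topics = defaultdict(deque)

    def publish(self, topic: str, payload: bytes) -> None:
        self._topics[topic].append(payload)

    def poll(self, topic: str) -> Optional[bytes]:
        """Return the oldest payload on the topic, or None if it is empty."""
        queue = self._topics[topic]
        return queue.popleft() if queue else None
```

The collector would call `broker.publish("articles.found", encode(message))` and the parser would `decode` whatever `poll` returns. Keeping the payload as plain JSON bytes means each service depends only on the message shape, not on the other services' code.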

Source Code

The project's codebase is accessible here: GitLab Repository

Technology Stack

Programming Language:

- Python

Databases:

- MongoDB

- Redis (enhanced with RedisInsight for improved monitoring)

Cache:

- Redis (monitored with RedisInsight)

Message Broker:

- Apache Kafka (monitored with KafkaUI)

Version Control and CI/CD:

- GitLab

Error Tracking and Logging:

- Sentry

Virtualisation:

- Docker

- Docker-compose

Code Quality Tools:

- flake8, mypy, black, ruff, dlint

- pytest

Python Libraries:

- urllib3

- requests

- loguru

- kafka-python-ng3

- python-dotenv

- pydantic

- sentry-sdk[loguru]

- beautifulsoup4

- redis

- pymongo

- openai

Outcomes and Benefits

The technologies implemented have created an efficient, scalable system for parsing articles:

- Python, combined with powerful libraries like BeautifulSoup, facilitated reliable data extraction and asynchronous processing.

- MongoDB provided flexible, scalable data storage capabilities essential for managing large volumes of information.

- Redis improved system performance significantly by acting as a cache, reducing database load, and ensuring fast data access.

- Apache Kafka effectively handled inter-service messaging, enhancing system reliability and scalability.

- Sentry enabled rapid error detection and resolution, contributing to system stability.

- Docker and docker-compose simplified system deployment, ensuring isolation of components and streamlining testing and integration processes.

- Code Quality Tools ensured consistently high standards of code, proactively addressing potential issues during development.
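The caching role described for Redis above is commonly implemented as a cache-aside lookup, sketched below. This is an assumption about the pattern, not a description of the project's code: `get_article`, the `article:` key prefix and the one-hour expiry are illustrative, and `DictCache` is an in-memory stand-in that mimics only the `get` and `set(name, value, ex=...)` calls a Redis client would provide.

```python
import json
from typing import Callable, Optional


class DictCache:
    """In-memory stand-in exposing the get/set subset used below."""

    def __init__(self) -> None:
        self._data = {}

    def get(self, name: str) -> Optional[str]:
        return self._data.get(name)

    def set(self, name: str, value: str, ex: Optional[int] = None) -> None:
        # `ex` (expiry in seconds) is accepted for interface parity
        # but ignored by this stand-in.
        self._data[name] = value


def get_article(
    url: str,
    cache,
    load_from_db: Callable[[str], dict],
    ttl_seconds: int = 3600,
) -> dict:
    """Return the article from the cache, falling back to the database."""
    key = f"article:{url}"
    cached = cache.get(key)
    if cached is not None:
        # Cache hit: the database is not touched.
        return json.loads(cached)
    # Cache miss: load once, then store for subsequent requests.
    article = load_from_db(url)
    cache.set(key, json.dumps(article), ex=ttl_seconds)
    return article
```

The first call for a URL reaches the database through `load_from_db`; repeated calls are served from the cache until the entry expires, which is how a cache reduces database load.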

The resulting system efficiently meets the client's requirements for monitoring and parsing, providing robust scalability for dynamic workload adjustments. Operational management has been substantially simplified through the integration of monitoring tools such as RedisInsight and KafkaUI.
