Your manufacturing data needs a hierarchical structure built on ISA-95 standards. That means consistent naming conventions, standardised formats (JSON for APIs, time-series databases for sensor streams), and a clean split between real-time operational data and batch business data. Want an IT company to implement automation with n8n or Python? Give them accessible API endpoints. Documented schemas. A unified namespace connecting shop-floor kit to enterprise applications. Without these foundations, even brilliant engineers will struggle.

In this guide, we'll cover:

- The ISA-95 data hierarchy and why it matters for automation

- How to structure schemas and naming conventions

- Real-time vs batch data: choosing the right approach

- Unified Namespace (UNS) architecture for modern factories

- Specific requirements for n8n workflow automation

- Python integration patterns and best practices

- Common data organisation mistakes that derail automation projects

Why Data Organisation Makes or Breaks AI Integration for Manufacturing

Let's be blunt. Most manufacturing automation projects don't fail because someone wrote bad code. They fail because the data is a mess. Your IT partner walks into the factory expecting structured information and finds a sprawl of proprietary protocols, naming conventions that change from shift to shift, and data silos that stopped communicating sometime around 2014. Nobody planned it this way. It just happened.

Fast Fact: The AI in manufacturing market is growing at 35.3% annually, projected to reach €145 billion by 2030. Yet only 13.5% of EU enterprises have adopted AI—mainly because their data isn't ready for it.

There's good news, though. European manufacturers sit ahead of their American counterparts on this front. Roughly 48% of European manufacturing firms already run big data and AI applications—compared to just 28% across the pond. The raw capability exists. What's missing? Organisation. Structure. A plan that makes sense to humans and machines alike.

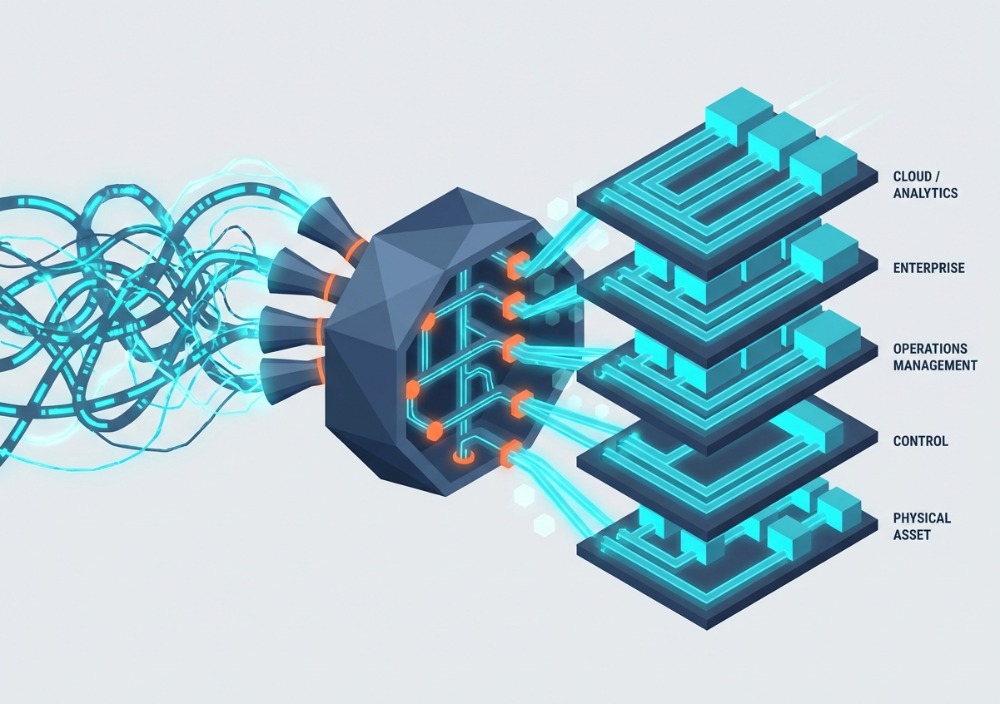

Understanding the ISA-95 Data Hierarchy

ISA-95 got a refresh last year. The April 2025 update (ANSI/ISA-95.00.01-2025) gives manufacturers a universal framework for structuring production data. Think of it as Esperanto for factories—a common language linking your shop floor to the boardroom, regardless of which vendor built what system.

The hierarchy breaks down into five levels:

| Level | Name | What Lives Here | Data Characteristics |
|---|---|---|---|
| 0 | Physical Process | Actual production equipment, raw materials | Physical state, no digital data yet |
| 1 | Sensing & Manipulation | Sensors, actuators, PLCs | Millisecond-level, high volume |
| 2 | Monitoring & Control | SCADA systems, HMIs | Second-level aggregation |
| 3 | Manufacturing Operations | MES, quality management | Minute-to-hour granularity |
| 4 | Business Planning | ERP, supply chain systems | Daily/weekly business metrics |

For n8n and Python work, you'll spend most of your time at Levels 2-4. Level 1? Too granular. Too fast. That's territory for dedicated industrial control systems, not workflow automation tools trying to orchestrate business logic.

What Does This Mean Practically?

Before handing anything to an IT company, map each data source to its level. Temperature sensor pinging every 100 milliseconds? Level 1. Aggregate it first—don't pipe raw streams into automation workflows. Production batch completion flags from your MES? Level 3. Perfect for triggering n8n workflows. Sales orders sitting in your ERP? Level 4. Ideal fodder for Python analytics scripts. The level dictates the treatment.
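
The mapping and the "aggregate it first" step can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the source names, the `SOURCE_LEVELS` inventory, and the one-minute bucket size are all assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from statistics import mean

# Hypothetical inventory: each data source tagged with its ISA-95 level.
SOURCE_LEVELS = {
    "TEMP-OVEN-B-003": 1,      # raw sensor, one reading every 100 ms
    "mes.batch_completed": 3,  # MES event
    "erp.sales_orders": 4,     # ERP records
}


def needs_aggregation(source: str) -> bool:
    """Level 1 streams should be aggregated before they reach a workflow."""
    return SOURCE_LEVELS[source] <= 1


def aggregate_per_minute(readings):
    """Collapse (timestamp, value) pairs into one mean value per minute."""
    buckets = defaultdict(list)
    for ts, value in readings:
        buckets[ts.replace(second=0, microsecond=0)].append(value)
    return {minute: mean(values) for minute, values in sorted(buckets.items())}


start = datetime(2026, 1, 15, 14, 30, tzinfo=timezone.utc)
# 1,200 synthetic readings at 100 ms intervals, i.e. two minutes of data.
raw = [(start + timedelta(milliseconds=100 * i), 180.0 + (i % 2)) for i in range(1200)]
per_minute = aggregate_per_minute(raw)
print(len(raw), "raw readings ->", len(per_minute), "aggregated points")
```

The workflow tool then sees two data points instead of 1,200, which is the whole purpose of aggregating Level 1 data before it leaves the control layer.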

Schema Design: The Foundation Your Automation Partner Needs

Bad schema design kills projects quietly. No dramatic failure. Just weeks of confusion, rework, and mounting frustration. Before any IT partner can build useful workflows, they need to understand your data structure. And that structure must stay consistent. Everywhere. Always.

Naming Conventions That Actually Work

Pick a naming scheme encoding asset type, location, and unique identifier. Stick with it. Examples:

- CNC-LINEA-001: First CNC machine on Line A

- TEMP-OVEN-B-003: Third temperature sensor in Oven B

- PROD-BATCH-2026-01-1542: Production batch from January 2026

Extend this convention everywhere. Database tables. API endpoints. Workflow variables. When maintenance engineers, operations managers, and external IT partners all read "CNC-LINEA-001" and picture the same machine, you've eliminated an entire class of integration headaches. Simple? Yes. Easy to enforce? Surprisingly difficult. Worth the effort? Absolutely.
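
One way to make the convention enforceable is to check identifiers automatically wherever they enter a system. The sketch below assumes asset IDs follow a TYPE-LOCATION-NNN pattern inferred from the examples above; the regular expression and the `parse_asset_id` helper are illustrative, and batch IDs would need their own pattern.

```python
import re

# Assumed pattern: uppercase type, one or more location segments, 3-digit sequence.
ASSET_ID = re.compile(
    r"^(?P<asset_type>[A-Z]+)"
    r"-(?P<location>[A-Z0-9]+(?:-[A-Z0-9]+)*)"
    r"-(?P<sequence>\d{3})$"
)


def parse_asset_id(asset_id: str) -> dict:
    """Split an asset ID into its parts, or raise if it breaks the convention."""
    match = ASSET_ID.match(asset_id)
    if match is None:
        raise ValueError(f"{asset_id!r} does not follow TYPE-LOCATION-NNN")
    return match.groupdict()


print(parse_asset_id("CNC-LINEA-001"))
print(parse_asset_id("TEMP-OVEN-B-003"))
```

A check like this can run in a database constraint, an API validation layer, or the first node of a workflow, so a stray "cnc_lineA_1" is rejected at the door instead of discovered during integration.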

The Three-Layer Schema Architecture

For AI-driven production systems, we recommend a layered approach:

- Raw Data Layer: Accept incoming data in its native format without transformation. This preserves complete information for reprocessing as your understanding evolves.

- Normalised Layer: Apply dimensional modelling—fact tables containing measurements (production counts, temperatures) connected to dimension tables (machine info, product specs, time periods).

- Presentation Layer: Optimised views and aggregations for specific queries. Your dashboards pull from here, not from raw data.

Star schemas shine in manufacturing contexts. Picture a central fact table holding timestamped production measurements, with spokes connecting to dimensions: machines, products, operators, quality standards, shifts. This setup lets you calculate KPIs like Overall Equipment Effectiveness (OEE) without wrestling your database into submission every time someone asks a question.
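
To make the star schema concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (`dim_machine`, `fact_production`, and so on) and the sample figures are assumptions for illustration. OEE is calculated with the standard definition: availability × performance × quality.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_machine (machine_key INTEGER PRIMARY KEY, asset_id TEXT, line TEXT);
CREATE TABLE fact_production (
    machine_key INTEGER REFERENCES dim_machine(machine_key),
    shift_date TEXT,
    planned_minutes REAL,      -- planned production time
    run_minutes REAL,          -- actual run time
    ideal_cycle_minutes REAL,  -- ideal time per part
    total_count INTEGER,
    good_count INTEGER
);
INSERT INTO dim_machine VALUES (1, 'CNC-LINEA-001', 'A');
INSERT INTO fact_production VALUES (1, '2026-01-15', 480, 420, 0.5, 700, 665);
""")


def oee_by_machine(connection):
    """Return {asset_id: OEE} by joining the fact table to the machine dimension."""
    rows = connection.execute("""
        SELECT m.asset_id,
               SUM(f.run_minutes) / SUM(f.planned_minutes) AS availability,
               SUM(f.ideal_cycle_minutes * f.total_count) / SUM(f.run_minutes) AS performance,
               SUM(f.good_count) * 1.0 / SUM(f.total_count) AS quality
        FROM fact_production f
        JOIN dim_machine m USING (machine_key)
        GROUP BY m.asset_id
    """).fetchall()
    return {asset: a * p * q for asset, a, p, q in rows}


for asset, oee in oee_by_machine(conn).items():
    print(f"{asset}: OEE {oee:.1%}")
```

Adding a product or shift dimension is a matter of one more key on the fact table and one more JOIN; the OEE arithmetic itself stays untouched.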

Real-Time Streaming vs Batch Processing: Which Do You Need?

Not everything needs real-time processing. Really. Some data can wait an hour. Some can wait a day. Recognising the difference saves money and prevents the over-engineering that makes systems brittle and expensive to maintain.

Fast Fact: The industrial edge computing market is projected to reach €330 billion by 2027, driven by manufacturers' need to process time-sensitive data locally rather than sending everything to the cloud.

When to Use Real-Time Streaming

- Equipment health monitoring where anomalies require immediate response

- Quality control decisions that must happen before a part moves to the next station

- Safety-critical alerts (temperature spikes, pressure anomalies)

MQTT dominates real-time factory communications now. It runs on a publish-subscribe model—sensors push data to topics such as factory/lineA/oven/temperature, and any authorised system subscribes to the topics it needs. Clean. Efficient. No polling overhead.
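
Subscriptions can use wildcards, which is what lets a consumer pick exactly the slice of the factory it cares about: `+` matches a single topic level and `#` matches everything below a point. The function below is a simplified, broker-free sketch of that matching rule (it ignores special cases such as `$`-prefixed system topics); in a real deployment the broker does this for you.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if an MQTT-style subscription filter matches a topic."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent and everything below it.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


topic = "factory/lineA/oven/temperature"
print(topic_matches("factory/lineA/#", topic))            # everything on Line A
print(topic_matches("factory/+/oven/temperature", topic))  # oven temperature on any line
print(topic_matches("factory/lineB/#", topic))            # a different line
```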

OPC UA adds semantic muscle. Instead of transmitting a naked "45," OPC UA packages context: the value 45, its engineering unit (degrees Celsius), the node it came from (the boiler sensor), a timestamp, and a status code describing the quality of the reading. Over 45 million automation products support OPC UA, so compatibility is rarely the blocker.

When Batch Processing Is Sufficient

- Daily production reports and KPI calculations

- Inventory synchronisation between MES and ERP

- Historical trend analysis for continuous improvement

- Regulatory compliance reporting

For these scenarios, hourly or daily extractions work brilliantly. Simpler to build. Easier to debug. Cheaper to run. Don't let anyone convince you that everything must stream in real time. That's expensive ideology, not engineering wisdom.

The Unified Namespace Approach: A Modern Alternative

Classical ISA-95 pushes data upward through layers. Sensors feed SCADA. SCADA feeds MES. MES feeds ERP. Each layer polls the one beneath it. Functional? Yes. Elegant? Not particularly. The approach creates bottlenecks and bakes in latency that modern operations struggle to tolerate.

Unified Namespace (UNS) inverts the model entirely. Every system publishes to a central broker. Every authorised consumer subscribes. Hub-and-spoke architecture. One source of truth for the factory's current state. No more chasing data through five layers to answer a simple question.

Key Benefits for Automation

- Reduced bandwidth: Data only transmits when something changes (Report-by-Exception)

- Easier integration: New applications subscribe to existing topics without modifying source systems

- Consistency: Everyone sees the same data at the same time
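
The publish/subscribe flow and Report-by-Exception can be illustrated without any infrastructure. The class below is a toy, in-memory stand-in for a broker, written only to show the idea; `InMemoryNamespace`, the `deadband` parameter, and the topic name are assumptions for this sketch, and a production UNS would sit on a real MQTT broker.

```python
from collections import defaultdict


class InMemoryNamespace:
    """Toy stand-in for a broker: keeps the latest value per topic."""

    def __init__(self):
        self.state = {}                       # current state of the factory
        self.subscribers = defaultdict(list)  # topic -> callbacks

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, value, deadband=0.0):
        """Forward the value only if it moved by more than `deadband`."""
        previous = self.state.get(topic)
        if previous is not None and abs(value - previous) <= deadband:
            return False  # nothing meaningful changed, so nothing is sent
        self.state[topic] = value
        for callback in self.subscribers[topic]:
            callback(topic, value)
        return True


uns = InMemoryNamespace()
received = []
uns.subscribe("factory/lineA/oven/temperature", lambda t, v: received.append(v))
for reading in [180.0, 180.1, 180.2, 183.0, 183.1]:
    uns.publish("factory/lineA/oven/temperature", reading, deadband=0.5)
print(received)  # [180.0, 183.0]
```

Five readings arrive, two are forwarded. The subscriber never touched the sensor or the system that owns it, which is the integration benefit in miniature.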

Starting fresh? Planning a major upgrade? UNS architecture makes n8n and Python integration dramatically simpler. Your IT partner connects to the MQTT broker once and immediately sees everything. No hunting through documentation. No negotiating access with three different system owners. Just data, available and consistent.

Preparing Your Data for n8n Automation

n8n blends visual workflow design with real coding flexibility. You drag nodes around a canvas, but you can also drop into JavaScript or Python when the pre-built components fall short. For manufacturing—where you're stitching together ancient MES systems, modern cloud services, and everything between—this hybrid approach proves invaluable.

What n8n Needs From Your Data

- API Access: n8n's HTTP Request node works most smoothly with REST APIs that use standard authentication. If your MES only speaks SOAP or a proprietary protocol, plan for a middleware layer.

- JSON Format: n8n passes data between nodes as JSON objects. Systems that output CSV or XML will require transformation nodes.

- MQTT Connectivity: For real-time triggers, n8n can subscribe to MQTT topics directly. Ensure your broker is accessible from wherever n8n runs.

- Webhook Endpoints: Many workflows trigger from incoming webhooks. Your MES or SCADA system should be able to POST events to external URLs.
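
If a system can only export CSV, the transformation into workflow-ready JSON is small. The sketch below assumes a hypothetical MES export with `batch_id`, `machine_id`, `good_count`, and `scrap_count` columns, and wraps each row in a `json` key to mirror the item shape n8n passes between nodes.

```python
import csv
import io
import json


def csv_to_items(csv_text: str) -> list:
    """Turn CSV rows into a list of {"json": {...}} items with typed counts."""
    reader = csv.DictReader(io.StringIO(csv_text))
    items = []
    for row in reader:
        # CSV has no types, so numeric columns are converted explicitly.
        row["good_count"] = int(row["good_count"])
        row["scrap_count"] = int(row["scrap_count"])
        items.append({"json": row})
    return items


export = """batch_id,machine_id,good_count,scrap_count
PROD-BATCH-2026-01-1542,CNC-LINEA-001,665,35
"""
print(json.dumps(csv_to_items(export), indent=2))
```

The explicit type conversion matters more than it looks: a count that arrives as the string "665" will quietly break comparisons and sums further down the workflow.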

Common n8n Manufacturing Workflows

| Workflow Type | Trigger | Actions | Complexity |
|---|---|---|---|
| Production Sync | Scheduled (hourly) | Extract MES data → Transform → Load to data warehouse | Low |
| Quality Alert | MQTT message | Check threshold → Route to Slack/Email → Log incident | Medium |
| Predictive Maintenance | Sensor data | Extract features → Call ML model → Route based on risk score | High |
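
As an illustration of the Quality Alert row, the decision logic behind "check threshold, then route" might look like the sketch below. The limits, the payload shape (`{"value": ...}`), and the rule that larger deviations go to email are all assumptions made for the example, not part of any n8n API.

```python
import json

# Hypothetical acceptable range per topic, in degrees Celsius.
LIMITS = {"factory/lineA/oven/temperature": (170.0, 190.0)}


def route_quality_alert(topic: str, payload: str) -> dict:
    """Decide what a workflow should do with one incoming MQTT message."""
    reading = json.loads(payload)
    low, high = LIMITS[topic]
    value = reading["value"]
    if low <= value <= high:
        return {"action": "none", "topic": topic, "value": value}
    # Assumed routing rule: small excursions go to chat, large ones to email.
    deviation = max(low - value, value - high)
    channel = "email" if deviation > 10 else "slack"
    return {"action": "alert", "channel": channel, "topic": topic, "value": value}


print(route_quality_alert("factory/lineA/oven/temperature", '{"value": 195.0}'))
```

In n8n the same decision would typically be split across an MQTT trigger, an IF or Switch node, and a notification node; writing it out as a function first is a cheap way to agree on the rules before anyone builds the workflow.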

Fast Fact: AI-driven predictive maintenance can decrease equipment downtime by up to 50% and reduce maintenance costs by 40% compared to reactive approaches.

n8n Pricing Considerations

Cloud pricing for 2026 runs €50/month for Starter (10,000 executions), €100/month for Pro (50,000 executions), and €300/month for Business (500,000 executions). Self-hosting? The Community Edition carries no licence fee, although n8n is source-available under a fair-code licence rather than open source in the strict sense. But don't kid yourself about total cost. Servers, monitoring, security patches, and someone to manage it all. Budget €200/month minimum for a production-grade self-hosted setup. Probably more.

Preparing Your Data for Python Automation

Python gives you maximum control. Full stop. If n8n resembles a well-stocked toolkit, Python is an entire machine shop. You can fabricate virtually anything. But you need skilled machinists, and you'll spend more time on fundamentals that n8n handles automatically.

What Python-Based Automation Requires

- Database Connectivity: Direct access to your data warehouse or time-series database. PostgreSQL, InfluxDB, and TimescaleDB are common choices.

- Industrial Protocol Libraries: For direct PLC communication, libraries like pymodbus or opcua handle the heavy lifting.

- Structured Data Exports: Even if real-time access isn't possible, regular exports to CSV or Parquet files enable batch processing.

- Documentation: Python developers need data dictionaries explaining what each field means, its units, valid ranges, and update frequency.
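
A data dictionary is most useful when it is machine-readable as well as human-readable. The sketch below shows one possible shape, assuming hypothetical field names, units, and ranges; the `FieldSpec` structure and `validate_record` helper are illustrations, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    description: str
    unit: str
    valid_min: float
    valid_max: float
    update_seconds: int  # how often a new value is expected


# Hypothetical entries; a real dictionary would cover every exposed field.
DATA_DICTIONARY = {
    "oven_temperature": FieldSpec("Oven B core temperature", "degC", 0.0, 400.0, 60),
    "spindle_speed": FieldSpec("CNC spindle speed", "rpm", 0.0, 24000.0, 1),
}


def validate_record(record: dict) -> list:
    """Return a list of problems; an empty list means the record is clean."""
    problems = []
    for name, value in record.items():
        spec = DATA_DICTIONARY.get(name)
        if spec is None:
            problems.append(f"{name}: not in data dictionary")
        elif not spec.valid_min <= value <= spec.valid_max:
            problems.append(
                f"{name}: {value} {spec.unit} outside {spec.valid_min}-{spec.valid_max}"
            )
    return problems


print(validate_record({"oven_temperature": 185.0, "spindle_speed": 30000.0, "mystery": 1}))
```

The same structure can be exported as a table for humans, so the documentation and the validation rules never drift apart.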

The Python Ecosystem for Manufacturing

- Pandas: Data manipulation and analysis

- NumPy: Numerical computation

- scikit-learn: Machine learning models for predictive maintenance, quality prediction

- Apache Airflow: Workflow orchestration for complex data pipelines

- TensorFlow/PyTorch: Deep learning for computer vision quality inspection

n8n vs Python: When to Use Each

| Scenario | Best Choice | Reasoning |
|---|---|---|
| Simple system-to-system sync | n8n | Visual design, pre-built connectors, faster deployment |
| Complex ML model integration | Python | Full library access, custom algorithms |
| Event-driven alerts | n8n | Built-in MQTT support, easy conditional routing |
| Large-scale data processing | Python (Airflow) | Better handling of millions of records |
| Hybrid workflows | Both | n8n orchestrates, Python handles heavy computation |

In practice, we often combine both at Flexi IT. n8n manages orchestration—triggering workflows, routing decisions, connecting systems. Python code nodes embedded within those workflows handle the heavy analytical lifting. Best of both worlds, honestly.

Common Data Organisation Mistakes to Avoid

We've implemented AI automation in factories across Europe. Dozens of projects. Different industries, different scales, different legacy systems. The same mistakes appear everywhere. Here's what keeps going wrong—and how to sidestep it.

1. Inconsistent Timestamps

One system logs UTC. Another uses local time. A third stores Unix epoch values. Try correlating sensor readings with production events across these formats. You'll get nonsense. Or worse: plausible-looking nonsense that nobody questions until it's too late. Solution: Standardise on UTC with ISO 8601 format (2026-01-15T14:30:00Z). Everywhere. No exceptions.
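
A small normalisation function at the ingestion boundary is usually enough to enforce this. The sketch below handles the three cases mentioned above; the `to_iso_utc` name is an assumption, and the fixed UTC+1 offset stands in for a plant's local time purely to keep the example self-contained (a real system would use a proper time zone database so daylight saving is handled).

```python
from datetime import datetime, timedelta, timezone


def to_iso_utc(value, local_tz=timezone.utc) -> str:
    """Normalise an epoch number or ISO-like string to ISO 8601 UTC."""
    if isinstance(value, (int, float)):
        # Unix epoch seconds are UTC by definition.
        moment = datetime.fromtimestamp(value, tz=timezone.utc)
    else:
        moment = datetime.fromisoformat(value.replace("Z", "+00:00"))
        if moment.tzinfo is None:
            # Naive timestamps are assumed to be in the plant's local time.
            moment = moment.replace(tzinfo=local_tz)
    return moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


CET = timezone(timedelta(hours=1))  # simplification: fixed offset, no DST

print(to_iso_utc("2026-01-15T14:30:00Z"))               # already UTC
print(to_iso_utc("2026-01-15T15:30:00", local_tz=CET))  # local plant time
print(to_iso_utc(1768487400))                           # Unix epoch seconds
```

All three inputs describe the same instant and all three come out as 2026-01-15T14:30:00Z, which is exactly the property you need before joining sensor data to production events.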

2. Missing Context

A temperature reading of "87" tells you almost nothing. Celsius? Fahrenheit? Which sensor? What's the acceptable range? Without metadata, raw numbers become guessing games. Solution: Use OPC UA for semantic richness, or mandate that every data point carries its metadata. Unit. Source. Confidence. Expected bounds.
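
Where OPC UA isn't available, a self-describing message envelope gets you much of the same benefit. The `Measurement` structure below is one hypothetical shape for such an envelope; the field names and the two supported units are assumptions for this sketch.

```python
from dataclasses import asdict, dataclass
import json


def _fahrenheit_to_celsius(value: float) -> float:
    return round((value - 32) * 5 / 9, 2)


@dataclass(frozen=True)
class Measurement:
    source: str     # which sensor produced the value
    value: float
    unit: str       # "degC" or "degF" in this sketch
    timestamp: str  # ISO 8601, UTC
    low: float      # expected bounds, in the same unit as value
    high: float

    def in_celsius(self) -> "Measurement":
        """Return an equivalent measurement expressed in degrees Celsius."""
        if self.unit == "degC":
            return self
        if self.unit != "degF":
            raise ValueError(f"unsupported unit: {self.unit}")
        return Measurement(
            self.source,
            _fahrenheit_to_celsius(self.value),
            "degC",
            self.timestamp,
            _fahrenheit_to_celsius(self.low),
            _fahrenheit_to_celsius(self.high),
        )


reading = Measurement("TEMP-OVEN-B-003", 87.0, "degF", "2026-01-15T14:30:00Z", 50.0, 120.0)
print(json.dumps(asdict(reading.in_celsius())))
```

With the unit travelling alongside the number, "87" stops being a guessing game: a consumer can convert it, check it against its bounds, and trace it back to a specific sensor.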

3. No Historical Retention Policy

Some factories hoard everything indefinitely. Storage costs balloon. Query performance tanks. Other factories purge too aggressively and discover, six months later, that they've deleted training data essential for rebuilding an ML model. Solution: Define retention tiers. Raw data for 30 days. Aggregated data for 2 years. Summary statistics forever. Adjust based on actual analytical needs.
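
The tiers above translate directly into a rule a nightly clean-up job can apply. This is a minimal sketch using the example thresholds from this section (30 days, 2 years); the function name and tier labels are assumptions, and the numbers should be adjusted to your own analytical needs.

```python
from datetime import date, timedelta

RAW_DAYS = 30              # keep raw data for 30 days
AGGREGATED_DAYS = 2 * 365  # keep aggregates for roughly 2 years


def retention_tier(record_date: date, today: date) -> str:
    """Return the most detailed tier that should still hold data of this age."""
    age_days = (today - record_date).days
    if age_days <= RAW_DAYS:
        return "raw"
    if age_days <= AGGREGATED_DAYS:
        return "aggregated"
    return "summary"


today = date(2026, 1, 15)
for days_old in (7, 90, 1000):
    print(days_old, "days old ->", retention_tier(today - timedelta(days=days_old), today))
```

Before a job like this deletes anything, confirm that the aggregates for that period actually exist; that ordering is what prevents the "we purged our training data" scenario.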

4. Siloed Data Ownership

Maintenance owns vibration data. Quality owns inspection records. Operations owns throughput numbers. Each department guards its territory. Nobody assembles the complete picture. Predictive models starve for want of correlated inputs. Solution: Build a data governance framework. Clear ownership, yes—but shared access. Cross-functional visibility isn't optional for serious AI work.

5. Over-Engineering Real-Time Requirements

Someone decides everything must stream in milliseconds. Infrastructure costs explode. Complexity multiplies. Then you realise half those metrics only matter in daily reports anyway. Solution: Match data velocity to genuine business need. Real-time for safety and quality decisions. Batch for everything else.

Key Terms Glossary

| Term | Definition |
|---|---|
| ISA-95 | International standard for integrating enterprise and control systems, defining a 5-level hierarchy from physical processes to business planning. |
| MES (Manufacturing Execution System) | Software that tracks and documents the transformation of raw materials to finished goods, operating at ISA-95 Level 3. |
| MQTT | Lightweight publish-subscribe messaging protocol designed for IoT and industrial applications. |
| OPC UA | Industrial communication protocol that provides semantic context, security, and complex data type support. |
| Unified Namespace (UNS) | Architecture pattern where all systems publish to and subscribe from a central broker, creating a single source of truth. |
| PLC (Programmable Logic Controller) | Industrial computer that controls manufacturing processes and equipment at the shop-floor level. |
| SCADA | Supervisory Control and Data Acquisition: systems that monitor and control industrial processes. |

Summary: Preparing Your Factory Data for Automation

- Adopt ISA-95 hierarchy: Organise data into the five standard levels, focusing automation efforts on Levels 2-4.

- Standardise naming conventions: Use consistent, descriptive identifiers across all systems (e.g., CNC-LINEA-001).

- Implement layered schemas: Raw → Normalised → Presentation layers enable both flexibility and performance.

- Expose APIs: REST endpoints with JSON output are essential for n8n; direct database access helps Python workflows.

- Choose the right velocity: Real-time streaming for safety and quality; batch processing for reporting and analytics.

- Consider Unified Namespace: For greenfield projects or major upgrades, UNS simplifies integration dramatically.

- Document everything: Data dictionaries, schema diagrams, and protocol specifications save weeks of discovery time.

Ready to Automate Your Production Data?

Organised data isn't glamorous. It won't make headlines. But it's the difference between automation projects that deliver and ones that collapse under their own complexity. At Flexi IT, we bridge shop-floor reality and modern automation platforms every day—whether that means architecting data structures, building n8n workflows, or writing custom Python solutions for European manufacturers.

We don't just build automations. We build the foundations that make them work. Planning an AI integration for manufacturing this year? Let's talk about getting your data ready first.